Nvidia researchers have unveiled AI technology that could revolutionize how large language models handle massive amounts of information while maintaining real-time responsiveness.

Described in an Nvidia blog post, Helix Parallelism addresses a critical challenge in modern AI applications: how to process and remember enormous amounts of information without slowing down. This matters because modern AI assistants need to recall entire conversations, analyze lengthy documents or work with complex code while still responding quickly to users.

“Helix Parallelism enables up to a 32x increase in the number of concurrent users at a given latency, compared to the best-known prior parallelism methods for real-time decoding with ultra-long context,” authors Nidhi Bhatia, Laikh Tewari, Shar Narasimhan and Bita Darvish Rouhani wrote in the post.

The technology overcomes memory and processing limitations that typically occur when AI systems try to work with very large contexts, like an entire encyclopedia’s worth of information. While previous approaches forced a trade-off between speed and memory capacity, Helix Parallelism reorganizes how the work is divided across GPUs so that a system can hold a very large context and still respond quickly.
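To give a sense of the memory pressure involved, here is a rough back-of-the-envelope sketch in Python. It assumes a conventional attention design in which every layer stores one key and one value vector per token per KV head. The function name `kv_cache_bytes` and the model dimensions are hypothetical, chosen only for illustration, and do not come from Nvidia's post.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, context_tokens, bytes_per_value=2):
    """Approximate KV cache size for ONE user's context.

    Assumes standard attention: each layer keeps a key vector and a value
    vector per token per KV head. bytes_per_value=2 corresponds to 16-bit storage.
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value  # 2 = key + value
    return per_token * context_tokens


# Hypothetical model shape, for illustration only.
total = kv_cache_bytes(num_layers=64, num_kv_heads=8, head_dim=128, context_tokens=1_000_000)
print(f"{total / 1e9:.1f} GB per user")  # prints "262.1 GB per user"
```

Under these assumed numbers, a single million-token conversation would need more cache than one GPU typically holds, which is why the cache has to be spread across devices.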

“Inspired by the structure of DNA, Helix interweaves multiple dimensions of parallelism—KV, tensor and expert—into a unified execution loop,” the researchers explained.

This allows each processing stage to operate in a configuration optimized for its specific bottleneck while reusing the same GPU resources.

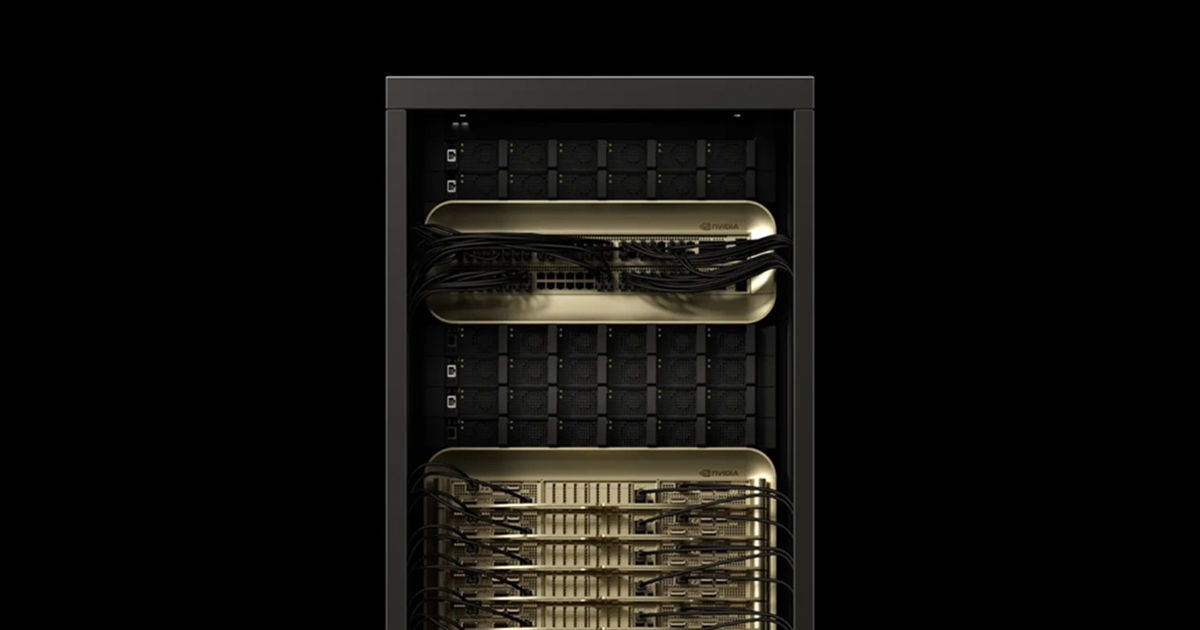

Nvidia specifically designed the technology to work with its newest Blackwell GPU architecture, taking full advantage of the high-speed connections between graphics processors that allow them to rapidly share information.

According to the post, simulations show performance gains for models with million-token contexts, including up to a 32x increase in concurrent users at a fixed latency budget and up to a 1.5x improvement in user interactivity in low-concurrency settings.

This advancement could significantly impact AI applications that require both extensive context processing and real-time interaction, such as virtual assistants, legal AI systems and coding assistants.

The technology achieves these performance improvements by distributing memory and processing tasks across multiple GPUs, which reduces the strain on any single device’s memory and makes the overall system more efficient.
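The general idea of splitting attention memory across devices can be sketched in a few lines of plain Python. This is not Nvidia's implementation. It is a minimal illustration of a well-known approach in which the stored keys and values are divided into shards, each shard computes a partial result, and the partial results are merged into exactly the answer a single device would have produced. All function names here are hypothetical, and each shard stands in for what would be a separate GPU in a real system.

```python
import math


def partial_attention(query, keys, values):
    """Attention over one shard of the cache.

    Returns (max score, softmax denominator, unnormalized output) so that
    results from several shards can be merged later.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]  # subtract max for numerical stability
    denom = sum(weights)
    out = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))]
    return m, denom, out


def combine(partials):
    """Merge per-shard results by rescaling each one to a shared maximum."""
    global_max = max(m for m, _, _ in partials)
    dim = len(partials[0][2])
    total_denom = 0.0
    total_out = [0.0] * dim
    for m, denom, out in partials:
        factor = math.exp(m - global_max)
        total_denom += factor * denom
        for d in range(dim):
            total_out[d] += factor * out[d]
    return [x / total_denom for x in total_out]


def sharded_attention(query, keys, values, num_shards):
    """Split the cache along the token dimension; each slice plays the role of one GPU."""
    shard_size = math.ceil(len(keys) / num_shards)
    partials = [
        partial_attention(query, keys[i:i + shard_size], values[i:i + shard_size])
        for i in range(0, len(keys), shard_size)
    ]
    return combine(partials)


# Toy data: one query attending over six cached tokens.
query = [0.5, -1.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 2.0], [2.0, -1.0], [0.5, 0.5]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0], [11.0, 12.0]]

single_device = sharded_attention(query, keys, values, num_shards=1)
three_devices = sharded_attention(query, keys, values, num_shards=3)
```

In this sketch `single_device` and `three_devices` agree up to floating-point rounding, while each shard only ever holds a third of the cache. In a real multi-GPU system the merge step is a communication step between devices, which is where fast GPU-to-GPU links matter.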

Nvidia plans to integrate Helix Parallelism into inference frameworks, potentially enabling more responsive and capable AI systems across various industries.